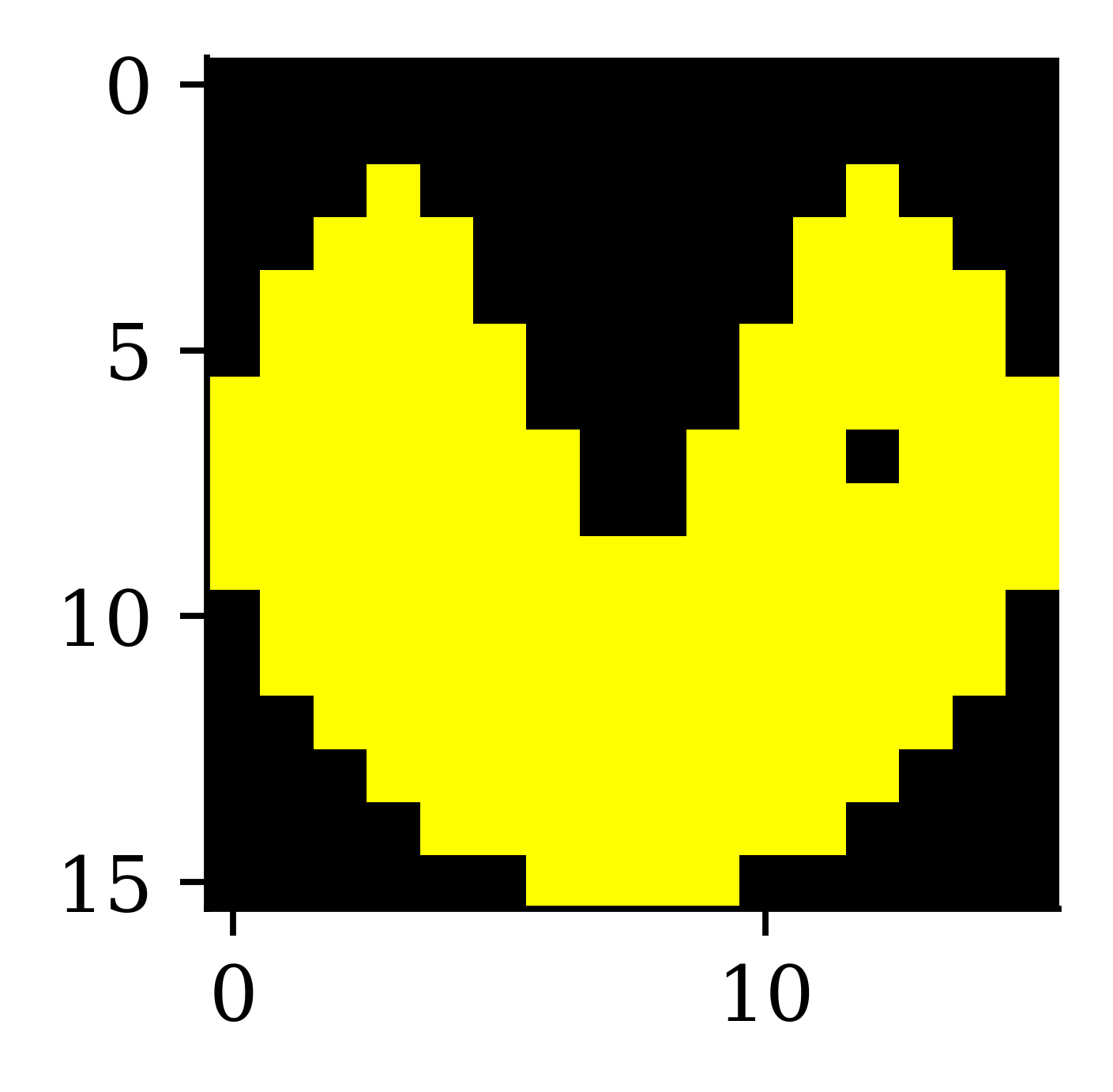

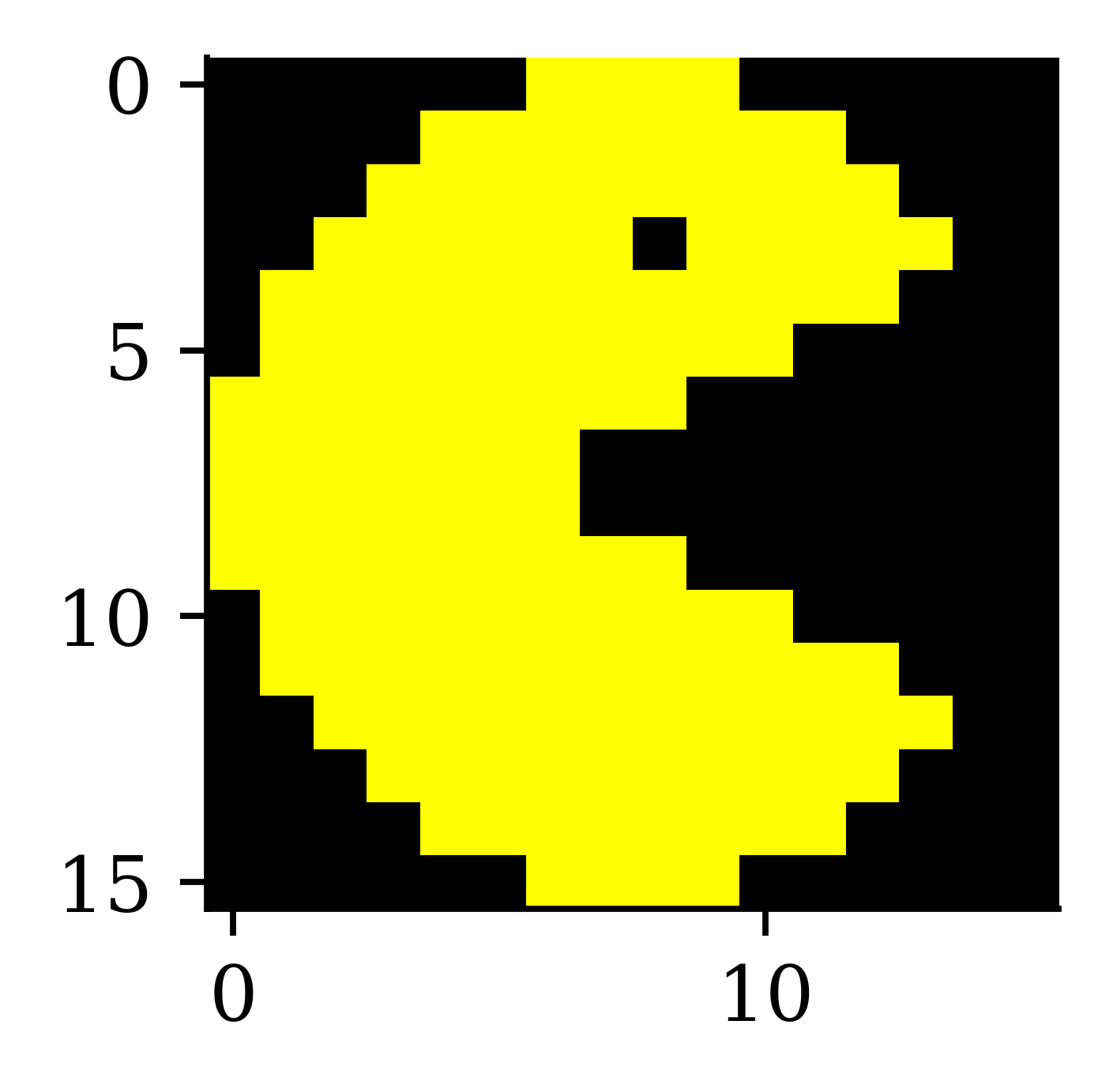

array([[[  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0]],

       [[255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0]],

       [[255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0]],

       [[255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0]],

       [[255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0]],

       [[  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0]],

       [[  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [255, 255,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0],
        [  0,   0,   0]]], dtype=uint8)
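The printed value is a 16×16 RGB image: shape `(16, 16, 3)`, dtype `uint8`, where `[255, 255, 0]` is yellow and `[0, 0, 0]` is black. As a minimal sketch, assuming NumPy is available, an array with exactly this layout can be rebuilt from a text mask. `MASK`, `YELLOW` and `mask_to_rgb` are hypothetical names chosen for illustration; they are not taken from the original code that produced the array.

```python
import numpy as np

# Hypothetical text mask, copied row by row from the array above:
# "Y" marks a yellow pixel [255, 255, 0], "." marks a black pixel [0, 0, 0].
MASK = [
    "................",
    "................",
    "...Y........Y...",
    "..YYY......YYY..",
    ".YYYY......YYYY.",
    ".YYYYY....YYYYY.",
    "YYYYYY....YYYYYY",
    "YYYYYYY..YYY.YYY",
    "YYYYYYY..YYYYYYY",
    "YYYYYYYYYYYYYYYY",
    ".YYYYYYYYYYYYYY.",
    ".YYYYYYYYYYYYYY.",
    "..YYYYYYYYYYYY..",
    "...YYYYYYYYYY...",
    "....YYYYYYYY....",
    "......YYYY......",
]

YELLOW = np.array([255, 255, 0], dtype=np.uint8)


def mask_to_rgb(mask):
    """Hypothetical helper: turn equal-length strings into an (H, W, 3) uint8 image."""
    # (H, W) boolean array, True wherever the mask has a "Y".
    is_yellow = np.array([[ch == "Y" for ch in row] for row in mask])
    # Start from an all-black image with one RGB triple per pixel.
    img = np.zeros(is_yellow.shape + (3,), dtype=np.uint8)
    # Boolean indexing selects every yellow pixel; the (3,) colour broadcasts over them.
    img[is_yellow] = YELLOW
    return img


img = mask_to_rgb(MASK)
print(img.shape, img.dtype)  # (16, 16, 3) uint8
```

Boolean indexing sets all yellow pixels in one vectorised assignment instead of looping over rows and columns, and editing a single character in the mask changes a single pixel.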